Rank One delivered another impressive performance in the latest iteration of the NIST FRVT Ongoing benchmark. This report included the ROC SDK v1.18 (listed as “rankone-006” in the report), with the following metrics:

| Metric | ROC SDK v1.18 (rankone-006, 2019-02-27) | ROC SDK v1.17 (rankone-005, 2018-10-09) |
|---|---|---|
| **Accuracy** | | |
| Visa (FNMR @ FMR = 10⁻⁴) | 0.007 | 0.010 |
| Mugshot (FNMR @ FMR = 10⁻⁵) | 0.012 | 0.017 |
| Wild (FNMR @ FMR = 0.005) | 0.035 | 0.051 |
| **Efficiency** | | |
| Enrollment Speed | 210 ms | 71 ms |
| Template Size | 165 bytes | 133 bytes |
| Comparison Speed | 443 ns | 403 ns |
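Each accuracy row reports FNMR at a fixed FMR: the decision threshold is set so that the false match rate on impostor comparisons stays at the target value, and the metric is the fraction of genuine comparisons rejected at that threshold. The sketch below illustrates that idea. It is not NIST's evaluation code; the function name `fnmr_at_fmr` is hypothetical, and it assumes higher scores mean more similar and that a comparison counts as a match only when its score is strictly above the threshold. NIST's exact threshold selection and tie handling may differ.

```python
from typing import Sequence


def fnmr_at_fmr(genuine: Sequence[float], impostor: Sequence[float], target_fmr: float) -> float:
    """Estimate FNMR at a fixed FMR from similarity scores (hypothetical helper).

    Assumes higher scores mean "more similar" and that a comparison is
    accepted as a match when its score is strictly greater than the threshold.
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    if not 0.0 <= target_fmr <= 1.0:
        raise ValueError("target_fmr must lie in [0, 1]")

    ranked = sorted(impostor, reverse=True)
    # How many impostor comparisons may be falsely accepted at this FMR.
    allowed = int(target_fmr * len(ranked))
    if allowed >= len(ranked):
        # Every impostor may pass, so accept everything.
        threshold = float("-inf")
    else:
        # Accept only scores above the (allowed + 1)-th highest impostor score,
        # so at most `allowed` impostors are accepted (fewer if scores tie).
        threshold = ranked[allowed]

    # FNMR: fraction of genuine comparisons rejected at this threshold.
    rejected = sum(1 for s in genuine if s <= threshold)
    return rejected / len(genuine)


if __name__ == "__main__":
    genuine = [0.95, 0.9, 0.8, 0.7, 0.4]
    impostor = [0.6, 0.5, 0.45, 0.35, 0.3, 0.25, 0.2, 0.15, 0.1, 0.05]
    # With 10 impostor scores, FMR = 0.1 allows one false match (0.6);
    # the threshold becomes 0.5, so the genuine score 0.4 is rejected.
    print(fnmr_at_fmr(genuine, impostor, 0.1))  # prints 0.2
```

The toy scores are illustrative only. A low operating point such as FMR = 10⁻⁵ needs at least 100,000 impostor comparisons before even a single false match is permitted, which is one reason these benchmarks rely on very large galleries.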

While nearly every vendor showed gaps in algorithmic performance, either struggling to achieve low error rates on both constrained and unconstrained datasets or imposing cumbersome computational requirements, the Rank One algorithm delivered impressive results across the board without a single performance deficiency.

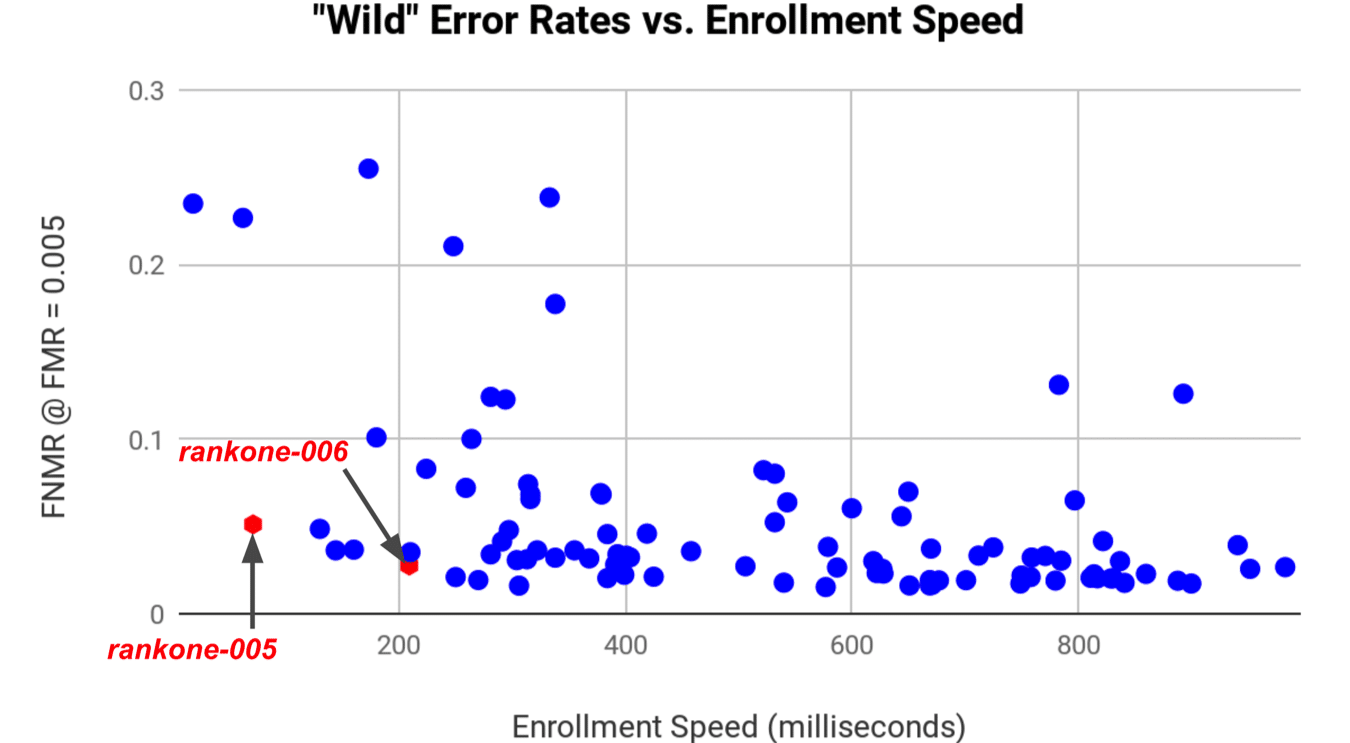

Illustrating this point, consider the following plot of error rate versus enrollment speed on the Wild dataset:

Among the most accurate algorithms, enrollment speeds differ substantially while error rates differ only minimally. In that regard, “rankone-006” gives system integrators a tremendous capability: high accuracy at a fraction of the computational cost.

Rank One improved accuracy on the constrained datasets by roughly the expected degree. Rank One continues to be one of the best performers on the Mugshot dataset, where it also has one of the smallest accuracy gaps between the “White” and “Black” race categories. Performance on Visa imagery continued to improve as well, helped by strong gains on the “Asian” race category and by one of the most balanced accuracy profiles across countries of origin.

The improvement on the Wild dataset, while a substantial 1.45x reduction in error rate, did not match the 2.0x reduction measured on many of Rank One’s internal datasets. Unfortunately, NIST provides little meaningful information about the contents of this Wild dataset, which makes the cause of this difference hard to understand. In the past, the Wild dataset was the IARPA Janus celebrity dataset. It is not clear whether NIST still uses celebrity or other publicly available imagery for its unconstrained benchmark; if it does, accuracy measurements could be biased, for example because such images may also appear in vendors’ training data. It is also not clear how the data is distributed across pose, which is helpful to know when configuring systems and diagnosing their performance.
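The reduction factors quoted here are ratios of the previous error rate to the current one, so a 2.0x reduction halves the error rate. The short sketch below recomputes the factors from the published FNMR values in the table at the top of this post. The helper name `reduction_factor` is hypothetical, and because the published values are rounded to three decimals, the results can differ in the second decimal from figures computed on unrounded data.

```python
# Published FNMR values (ROC SDK v1.17 -> v1.18) from the accuracy table.
FNMR = {
    "Visa": (0.010, 0.007),
    "Mugshot": (0.017, 0.012),
    "Wild": (0.051, 0.035),
}


def reduction_factor(previous: float, current: float) -> float:
    """Error rate reduction as previous / current (2.0 means the error halved)."""
    if current <= 0:
        raise ValueError("current error rate must be positive")
    return previous / current


if __name__ == "__main__":
    for dataset, (v117, v118) in FNMR.items():
        print(f"{dataset}: {reduction_factor(v117, v118):.2f}x")
    # prints:
    # Visa: 1.43x
    # Mugshot: 1.42x
    # Wild: 1.46x
```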

The rate of vendor improvement is another critical factor. Given the effort involved in switching vendors, the fast-paced face recognition industry is as much about where a vendor will be a year from now as about where it is today. On this measure, 23 vendors, including Rank One, submitted an algorithm to both the current April 2019 report and the previous November 2018 report. The following table compares Rank One’s error rate reduction between these two reports with the median reduction across those vendors:

| Dataset | Rank One Error Rate Reduction | Median Vendor Error Rate Reduction |
|---|---|---|
| Mugshot | 1.43x | 1.11x |
| Wild | 1.46x | 1.61x |
| Visa | 1.43x | 1.21x |
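The exact procedure behind the median column is not spelled out here; a natural reading is that a reduction factor is computed for each vendor on a dataset and the median is taken over those factors. The sketch below shows that reading under that assumption. The function `median_reduction` and its input format are hypothetical, and any inputs would be per-vendor error rates from the two NIST reports.

```python
from statistics import median
from typing import Iterable, Tuple


def median_reduction(pairs: Iterable[Tuple[float, float]]) -> float:
    """Median over vendors of previous_error / current_error for one dataset.

    `pairs` holds one (previous_error, current_error) tuple per vendor that
    submitted to both reports; current_error is assumed to be positive.
    """
    factors = [previous / current for previous, current in pairs]
    if not factors:
        raise ValueError("need at least one vendor")
    return median(factors)
```

Taking the median of per-vendor ratios keeps one vendor with an unusually large jump from dominating the summary, and it generally differs from the ratio of the median error rates in the two reports.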

While Rank One’s Wild accuracy gain fell short of the 2.0x improvement measured on internal datasets, which closely align with our end users’ unconstrained applications, the team has already made key improvements for the pending ROC SDK v1.19 that should yield better results on the current NIST Wild dataset.

Of course, while the whole vendor community is improving at an impressive pace, these gains are lost on users who do not have access to such improvements, or who use algorithms too slow to update easily.

Moving forward, the algorithm in Rank One’s next ROC SDK release, version 1.19, will be submitted to the June FRVT Ongoing, FRVT Quality, and FRVT 1:N benchmarks. The v1.20 algorithm will likely be submitted to the subsequent FRVT Ongoing benchmark. Rank One will continue to be fully transparent about which SDK version corresponds to each FRVT submission, which is critical information for users and integrators who make procurement decisions based on these reports.