A common misconception is that face recognition algorithms do not work on persons of color, or that they are inaccurate in general. This is not true.

The truth is that across a wide range of applications, modern face recognition algorithms achieve remarkably high accuracy on all races, and accuracy continues to improve at an exponential rate.

The most comprehensive industry-standard source for validating a face recognition algorithm is the U.S. National Institute of Standards and Technology (NIST) Face Recognition Vendor Test (FRVT). For two decades, this program has benchmarked the accuracy of the leading commercially available face recognition algorithms.

Due to the rapid progression of face recognition technology in recent years, NIST FRVT introduced the “Ongoing” benchmark program, which runs on a rolling basis every few months. FRVT Ongoing measures identity verification accuracy across millions of people and images, with wide variation in image capture conditions (constrained vs. unconstrained) and subject demographics (age, gender, race, national origin).

One dataset analyzed in depth in the NIST FRVT Ongoing benchmark is the Mugshot dataset. Performance reported on this dataset includes accuracy measurements on over one million images and persons, including accuracy breakouts across the following four demographic cohorts: Male Black, Male White, Female Black, and Female White.

In terms of overall face recognition accuracy, leading algorithms are extremely accurate on the Mugshot dataset. The top-performing algorithm correctly verified 99.64% of same-person (true positive) comparisons while correctly rejecting 99.999% of different-person comparisons, i.e., a false positive rate of only 0.001%. Another 50 benchmarked algorithms achieved at least 98.75% accuracy on same-person comparisons together with 99.999% accuracy on different-person comparisons.
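The two accuracy figures above are the complements of the error rates used in 1:1 verification testing: same-person accuracy is 1 minus the false non-match rate (FNMR), and different-person accuracy is 1 minus the false match rate (FMR). The sketch below, a minimal illustration assuming exactly that complement relationship, converts the quoted percentages into error rates and expected error counts. The function names are hypothetical and not part of any NIST tooling.

```python
"""Convert the accuracy percentages quoted above into verification error rates.

Assumption: same-person accuracy = 1 - FNMR, different-person accuracy = 1 - FMR.
Function names are hypothetical, for illustration only.
"""


def to_error_rate(accuracy_pct: float) -> float:
    """Return the error rate (as a fraction) implied by an accuracy percentage."""
    return (100.0 - accuracy_pct) / 100.0


def expected_errors(accuracy_pct: float, n_comparisons: int) -> float:
    """Expected number of errors over n_comparisons at the given accuracy."""
    return to_error_rate(accuracy_pct) * n_comparisons


if __name__ == "__main__":
    # Figures quoted for the top-performing algorithm on the Mugshot dataset.
    fnmr = to_error_rate(99.64)   # same-person pairs wrongly rejected
    fmr = to_error_rate(99.999)   # different-person pairs wrongly accepted
    print(f"FNMR = {fnmr:.4f}, FMR = {fmr:.5f}")  # FNMR = 0.0036, FMR = 0.00001
    print(f"False non-matches per 10,000 same-person pairs: "
          f"{expected_errors(99.64, 10_000):.0f}")    # 36
    print(f"False matches per 100,000 different-person pairs: "
          f"{expected_errors(99.999, 100_000):.0f}")  # 1
```

Put differently, 99.999% accuracy on different-person comparisons means roughly one false match per 100,000 such comparisons.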

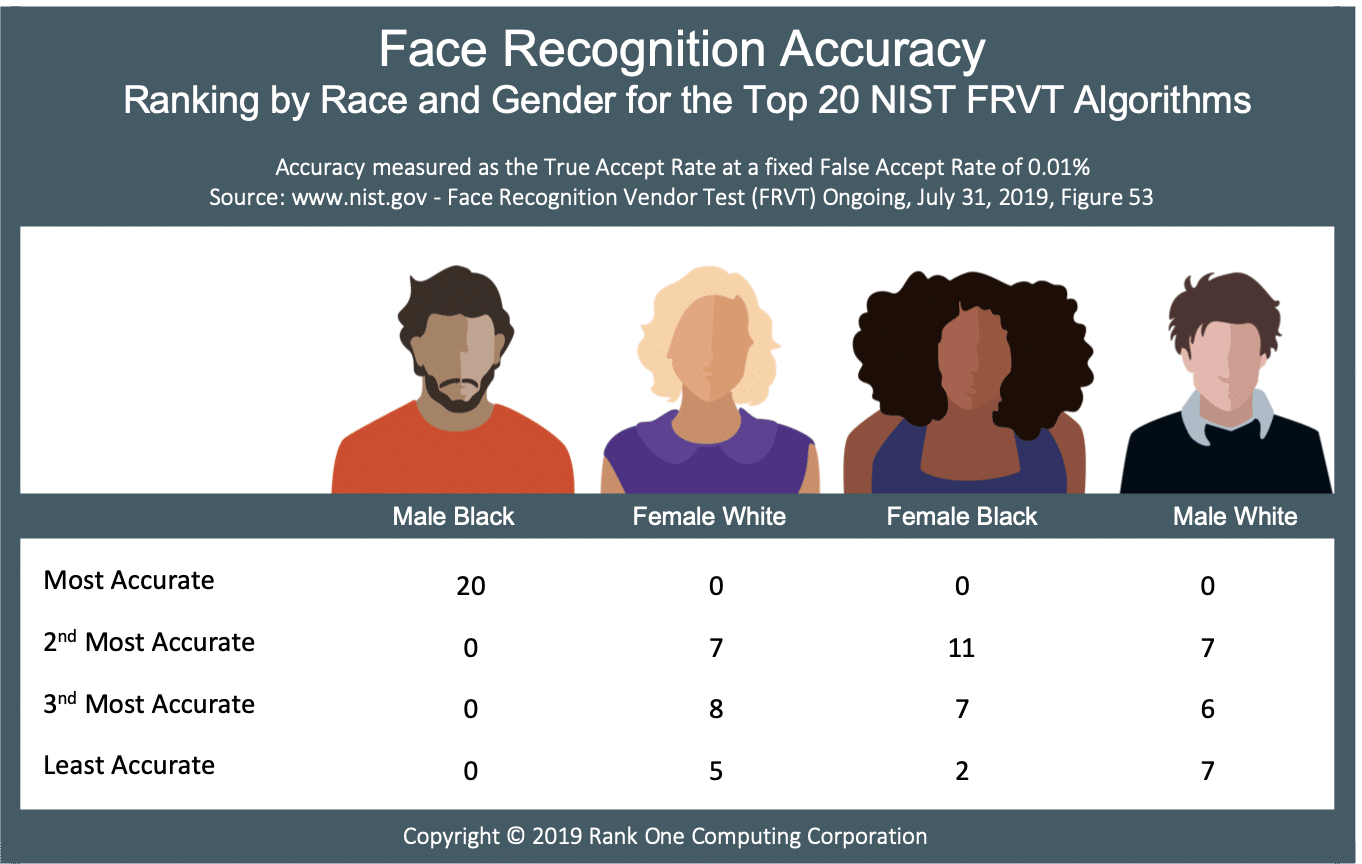

In terms of accuracy breakouts across the four race and gender cohorts, all of the top 20 algorithms were most accurate on Male Black subjects.

The following score chart breaks down the accuracy rank for each of the four cohorts across the top 20 algorithms:

As shown in the above tally, Male Black was the most accurate demographic cohort for the top 20 most accurate algorithms analyzed by NIST. While this is counter to conventional wisdom and the media’s narrative, the results are not particularly surprising. Here is why:

Face recognition algorithms are highly accurate on all races.

Across these 20 algorithms, the median difference in accuracy between an algorithm’s most accurate and least accurate cohort was only 0.3%.
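One reading of this “median difference” statistic is: for each algorithm, take the gap between its most and least accurate cohort, then take the median of those gaps across algorithms. The sketch below assumes that reading. The function names and every number in it are hypothetical placeholders chosen for illustration; they are not NIST FRVT results.

```python
"""Median spread between each algorithm's best and worst demographic cohort.

Assumption: "median difference" = median, across algorithms, of
(max cohort accuracy - min cohort accuracy) for each algorithm.
All names and numbers below are hypothetical placeholders, not NIST results.
"""
from statistics import median

# Hypothetical accuracies (%) for illustration only; NOT taken from NIST FRVT.
PLACEHOLDER_RESULTS: dict[str, dict[str, float]] = {
    "algorithm_a": {"Male Black": 99.75, "Male White": 99.5,
                    "Female Black": 99.5, "Female White": 99.5},
    "algorithm_b": {"Male Black": 99.5, "Male White": 99.25,
                    "Female Black": 99.0, "Female White": 99.25},
    "algorithm_c": {"Male Black": 99.75, "Male White": 99.5,
                    "Female Black": 99.0, "Female White": 99.25},
}


def cohort_gap(cohort_accuracy: dict[str, float]) -> float:
    """Difference between one algorithm's most and least accurate cohort."""
    values = cohort_accuracy.values()
    return max(values) - min(values)


def median_cohort_gap(results: dict[str, dict[str, float]]) -> float:
    """Median of the per-algorithm cohort gaps."""
    return median(cohort_gap(cohorts) for cohorts in results.values())


if __name__ == "__main__":
    for name, cohorts in PLACEHOLDER_RESULTS.items():
        print(f"{name}: gap = {cohort_gap(cohorts):.2f}")
    print(f"median gap = {median_cohort_gap(PLACEHOLDER_RESULTS):.2f}")
```

Using the median rather than the mean keeps a single outlier algorithm from dominating the summary figure.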

Academic institutions have been publicizing non-academic research.

The widespread belief that race significantly biases face recognition accuracy stems from a non-peer-reviewed investigative journalism article from Georgetown Law titled “The Perpetual Line-Up: Unregulated Police Face Recognition in America”. The sole source for its claim of racial bias was a peer-reviewed article written by my colleagues and me in 2012. That article was incomplete on the subject and was not sufficient to serve as the sole source for such a claim, yet it has indirectly served as one ever since the Georgetown report was published.

Another source commonly cited as evidence of inaccuracy on persons of color is a study by the MIT Media Lab, which did not measure face recognition accuracy. The “Gender Shades” project measured the accuracy of classifying a person’s gender (as opposed to recognizing their identity). One of the three commercial algorithms studied (Face++) was developed in China, and all three were least accurate at predicting the gender of darker-skinned women. Still, this study has been widely cited as an example of face recognition being inaccurate on persons of color. Again, this study did not measure face recognition accuracy.

Fast forward, and there has been a recent campaign to ban face recognition applications outright, regardless of their purpose and societal value. These initiatives are often premised on the claim that the algorithms are inaccurate on persons of color, which, as we have shown, is not true.

A path forward

Given the disproportionate impact of the criminal justice system on Black persons in the United States, the concern regarding whether a person’s race could impact a technology’s ability to function properly is valid and important. However, the current dialogue and public perception have left out a lot of key factual information and have further confused the public about how face recognition is used by law enforcement.

In addition to being misled on the accuracy of face recognition algorithms with respect to a person’s race, the public has even been misled about how law enforcement uses face recognition technology, as clarified in a recent article. In turn, cities like San Francisco have used such misinformation to compromise the safety of their constituents.

It is not in our nation’s interest to decide public policy based on politically motivated articles with weak scientific underpinnings. The benchmarks provided by NIST FRVT are currently the only reliable public source on face recognition accuracy as a function of race, and according to these benchmarks, all top-tier face recognition algorithms operating under certain conditions are highly accurate on both Black and White subjects (as well as on male and female subjects).
